How my research defined a clear value proposition, decreasing drop rates

My research identified key adoption barriers and prioritized the critical persona, transforming product and marketing strategy from emphasizing "build apps" to promoting peer-validated "use cases". This pivot led to a 25% increase in product adoption within a month.

Reading time: 4 min

Background

CreateAI is a SaaS platform that enables the Arizona State University (ASU) community to build custom AI experiences. As the product moved from Beta to Production, internal dashboards showed that usage was not growing as expected, particularly among faculty, a critical user segment.

This posed a key risk: without stronger adoption, improved platform capabilities alone would not translate into real impact. Product and marketing teams needed to decide how to position CreateAI, who to prioritize, and what barriers to address before scaling broader adoption efforts.

Goal

To identify the key attitudinal, trust, and workflow barriers preventing faculty and staff from adopting CreateAI Builder, and determine where the product and messaging needed to change to drive sustained usage.

My role

As the sole researcher on a small, early-stage product team, I established the UX research function from scratch. This included:

Creating foundational research processes, such as a participant repository, efficient recruitment methods and templates from the ground up.

Handling all aspects of research operations, including scheduling, participant management, consent, and data handling, in an environment without existing templates or processes.

Introducing AI in the research workflow, testing it and forming best practices for future research.

Running post-research design thinking workshops and creating visual deliverables for cross-functional teams based on their questions.

Methodology

Mixed-method UX research combining 20 user interviews and a survey with 125+ responses, exploring perceptions, motivations, and barriers impacting adoption.

Interviews identified why adoption succeeds or fails, and the survey tested how common those patterns are.

Results

Findings directly informed marketing strategy, clarifying the target audience, channels, messaging, and approach. They also influenced product direction by highlighting the importance of clearly defining CreateAI Builder’s unique value proposition. The product strategy now prioritizes existing applications over new features and positions the product distinctly from competitors, answering the critical user question: “Why CreateAI?”

25% increase in product adoption the following month

15% reduction in drop rates the following month

Let's dive deeper into how my research created CreateAI's USP

CreateAI gives ASU faculty and staff a safe, ASU‑governed way to build and use AI tools that fit their real workflows, saving time on repetitive tasks while keeping humans firmly in charge of judgment and student impact.

Timeline

Streamlining goal

The initial brief — “understand users, their motivations, and attitudes” — was broad and underspecified. To align stakeholders and clarify decision needs, I introduced a research intake process to surface the core problem, intended outcomes, and decisions this research needed to inform.

The data dashboards, meetings with stakeholders, customer tickets and other customer support channels helped me refine the goal and create a targeted research initiative.

Hypotheses

Overall attitudes and perceptions toward AI are the primary driver of low adoption.

Adoption is more likely when AI tools align with the most time-consuming parts of users’ workflows.

Low awareness is a major reason for low adoption of the product.

Recruiting

Faculty + Staff

Both CreateAI Builder users and non-users

Diverse roles with varied technical knowledge

I have formed strong relationships in the ASU community that help me share surveys and interview requests without incentives. My messaging is personal, and I maintain a survey completion rate above 35% and an interview no-show rate below 10%.

Relevant Slack channels

Personal network

Beta user group

Previous survey participants

Customer support channels

Regular reminders with strong messaging

User interviews - 18

Faculty + Staff

INTERVIEW GOALS

How people feel about AI (curiosity, skepticism, trust, hesitation)

Where AI fits—or fails to fit—into real workflows

What motivates continued use versus early drop-off

What kinds of support and clarity would make AI tools feel safe, useful, and worth adopting

OUTCOME

Insights from interviews directly informed

Persona development

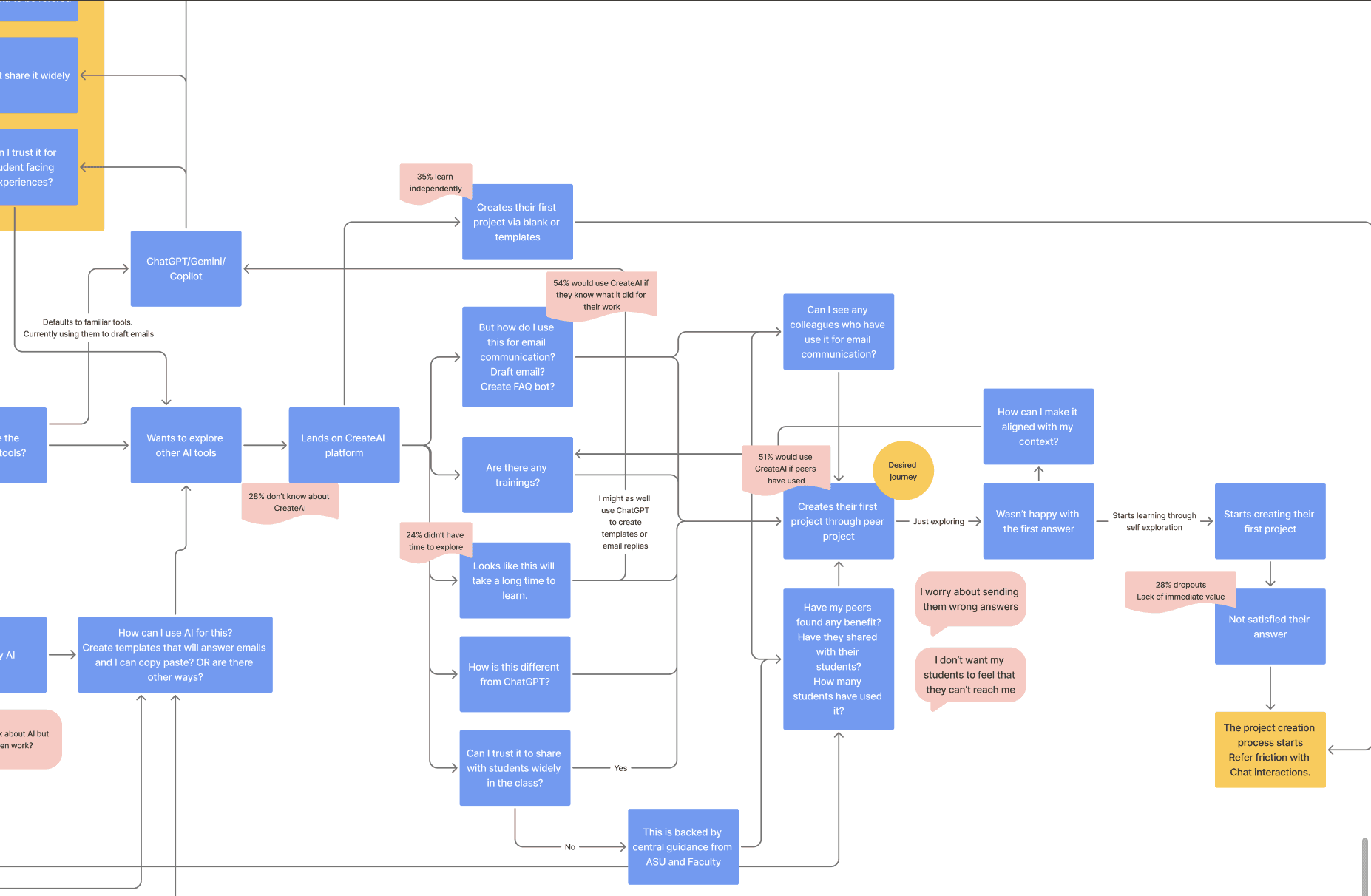

Decision-making tree

Clarification of adoption barriers

Marketing messaging

Recommendations for CreateAI’s positioning, training, and feature prioritization

Screenshot of note taking template for user interview

User Survey

USER SURVEY GOALS

Validating interview findings

Measuring the spread of personas

Gauging familiarity with AI/CreateAI and current usage

Identifying where CreateAI can help the most

OUTCOME

Insights from surveys directly informed

Persona distribution, quantified

Translation of qualitative insights into actionable numeric evidence

Precise usage priorities

Drop-off reasons at scale

Awareness levels and communication channels

Survey design logic

Analysis process

A. Analyzing User Interviews

B. Analyzing User Surveys

C. Final synthesis

Step 1

Organizing transcripts, familiarizing myself with the data, performing initial coding, and adding insights.

I created an AI solution that helped me organize transcripts and quotes, format them as a table, and apply initial tags.

Step 2

Clustering codes, running cross-case analysis, and finding themes to validate the hypotheses and answer the research questions.

Final themes were created here.

Step 3

Identified AI attitude profiles using a 5-item attitudinal scale.

Based on these responses, participants were grouped into distinct AI adoption personas.

Step 4

Persona segments were then cross-analyzed against usage, motivation, and workflow-related questions.

This allowed me to compare how different AI mindsets experience and engage with CreateAI tools.

Step 5

Triangulated qualitative data from user interviews with quantitative data from surveys.

Combining qualitative quotes with quantitative findings provided richer, more compelling evidence and increased confidence in the final insights.

Step 6

The findings were then prioritized by frequency and severity (the weight users gave to each issue).

The findings were structured by funnel stage, because drop-offs were measured at every stage.
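The prioritization in Step 6 can be illustrated with a small sketch. This is not the scoring used in the study: multiplying frequency by severity is an assumed way to combine the two, and the `Finding` class, the funnel stage names, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    label: str          # short description of the barrier
    funnel_stage: str   # hypothetical stage name, e.g. "awareness"
    mentions: int       # number of participants who raised it
    severity: float     # mean weight participants gave it, 1 (minor) to 5 (blocking)


def priority_score(finding: Finding, total_participants: int) -> float:
    """Assumed scoring: share of participants who raised it, times severity."""
    frequency = finding.mentions / total_participants
    return frequency * finding.severity


def prioritize_by_stage(findings, total_participants):
    """Group findings by funnel stage, highest score first within each stage."""
    by_stage = {}
    for finding in findings:
        by_stage.setdefault(finding.funnel_stage, []).append(finding)
    for stage_findings in by_stage.values():
        stage_findings.sort(
            key=lambda f: priority_score(f, total_participants),
            reverse=True,
        )
    return by_stage


# Made-up example data, not results from the study.
example = [
    Finding("Unclear what the tool does for my role", "awareness", 12, 4.0),
    Finding("Not enough time to explore", "activation", 10, 3.0),
    Finding("Worry about hallucinations", "activation", 8, 5.0),
]

ranked = prioritize_by_stage(example, total_participants=20)
for stage, items in ranked.items():
    print(stage, [f.label for f in items])
```

Sorting within each funnel stage, rather than globally, keeps the output aligned with how drop-offs were measured stage by stage.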

Major findings and Actions taken

Faculty and staff see AI as a productivity assistant, not a decision-maker.

Trust breaks down quickly around hallucinations, high-stakes tasks, and student-facing use cases. This tension arises mainly when AI is positioned as magic that can be used for everything.

All faculty mentioned that grading is the most time-consuming task, but only a few use AI for grading because it is considered a high-stakes task.

80% say they understand AI and 63% have seen some productivity gains, while 56% express strong reservations about AI in higher education.

Actions taken

Repositioned CreateAI as a co-pilot, not a replacement. Connected CreateAI to ASU values (access, equity, and inclusion) and presented it as a student-safe environment.

Aligned the microcopy of marketing messages and in-product content with user sentiment findings.

Addressed fears like “AI replacing jobs” with realistic framing.

Adoption is driven by task clarity & role-specific examples, not AI enthusiasm

64% of users sit in the pragmatic middle: they don’t struggle with what AI is, they struggle with what it can do for them. Adoption increases when AI is tied directly to a concrete, time-consuming task in the user’s workflow.

54% would use CreateAI if they knew what it did for their role. 51% would use it if their peers had used it and reported success.
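Persona shares like the one above come from grouping survey respondents by their answers to the 5-item attitudinal scale. A minimal sketch of that kind of grouping follows. The 1-to-5 response range, the cut-off values, and the "skeptic" and "enthusiast" labels are assumptions for illustration, not the segmentation rules used in the study.

```python
from collections import Counter
from statistics import mean


def assign_persona(item_scores, low=2.5, high=3.5):
    """Map one respondent's five 1-5 ratings to a persona label.

    The cut-offs `low` and `high` are hypothetical.
    """
    if len(item_scores) != 5:
        raise ValueError("expected answers to exactly 5 scale items")
    average = mean(item_scores)
    if average < low:
        return "skeptic"
    if average > high:
        return "enthusiast"
    return "pragmatic middle"


def persona_shares(responses):
    """Return each persona's share of respondents as a whole-number percentage."""
    counts = Counter(assign_persona(r) for r in responses)
    total = len(responses)
    return {persona: round(100 * n / total) for persona, n in counts.items()}


# Made-up responses, not survey data from the study.
responses = [
    [1, 2, 2, 1, 2],  # mean 1.6
    [3, 3, 4, 3, 2],  # mean 3.0
    [3, 2, 3, 4, 3],  # mean 3.0
    [5, 4, 5, 4, 5],  # mean 4.6
]

print(persona_shares(responses))
```

Once each respondent has a persona label, the same labels can be used to split answers to the usage, motivation, and workflow questions.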

Actions taken

The product strategy pivoted from positioning CreateAI as a way to "build apps" to promoting the use of already "built apps".

Recommended role-based starter templates aligned to top tasks.

Showcased peer projects and highlighted them on the platform.

The preferred AI use cases highlighted in research were used to create templates and messaging. The team shifted from platform-first to use-case-first messaging.

Users need a straightforward tool that doesn’t take much time to learn, has a clear purpose, and creates value

Users need a clear "Why CreateAI" when comparing it with other tools. Users associate brainstorming with ChatGPT and synthesizing documents with NotebookLM; similarly, CreateAI needs to establish its own clear positioning and differentiation.

63% would use CreateAI if they had more time to explore. Users defined value and success metrics as time saved, students supported, emails generated, and help with idea creation.

“A tool has to solve a problem for me, like speeding up grading or student engagement, to motivate me.”

Actions taken

Design focused on showing early value to users, both on the platform and in marketing messages.

The team focused on gathering success metrics from current users. The research identified which success metrics would be most impactful.

Defined CreateAI's Unique Value Proposition (UVP) based on the research findings. The UVP is shown below.

What users care about?

Trust, Time savings, clarity of use, control over student impact, peer validation

What competitors do really well?

Flexible chat, fast generic value, habit + brand

What CreateAI does really well?

ASU-governed environment, workflow tool builder, human-in-the-loop, role-based templates, peer gallery

CreateAI gives ASU faculty and staff a safe, ASU‑governed way to build and use AI tools that fit their real workflows, saving time on repetitive tasks while keeping humans firmly in charge of judgment and student impact.

Challenges

Recruited survey and interview participants without incentives or an existing user database

Led early career team members with varying availability and no established process

Defined research direction from scratch due to unclear goals and fragmented org documentation

Delivered rapid research to inform product marketing on a tight one-month timeline

Built research processes and safe, repeatable AI-in-workflow templates from the ground up

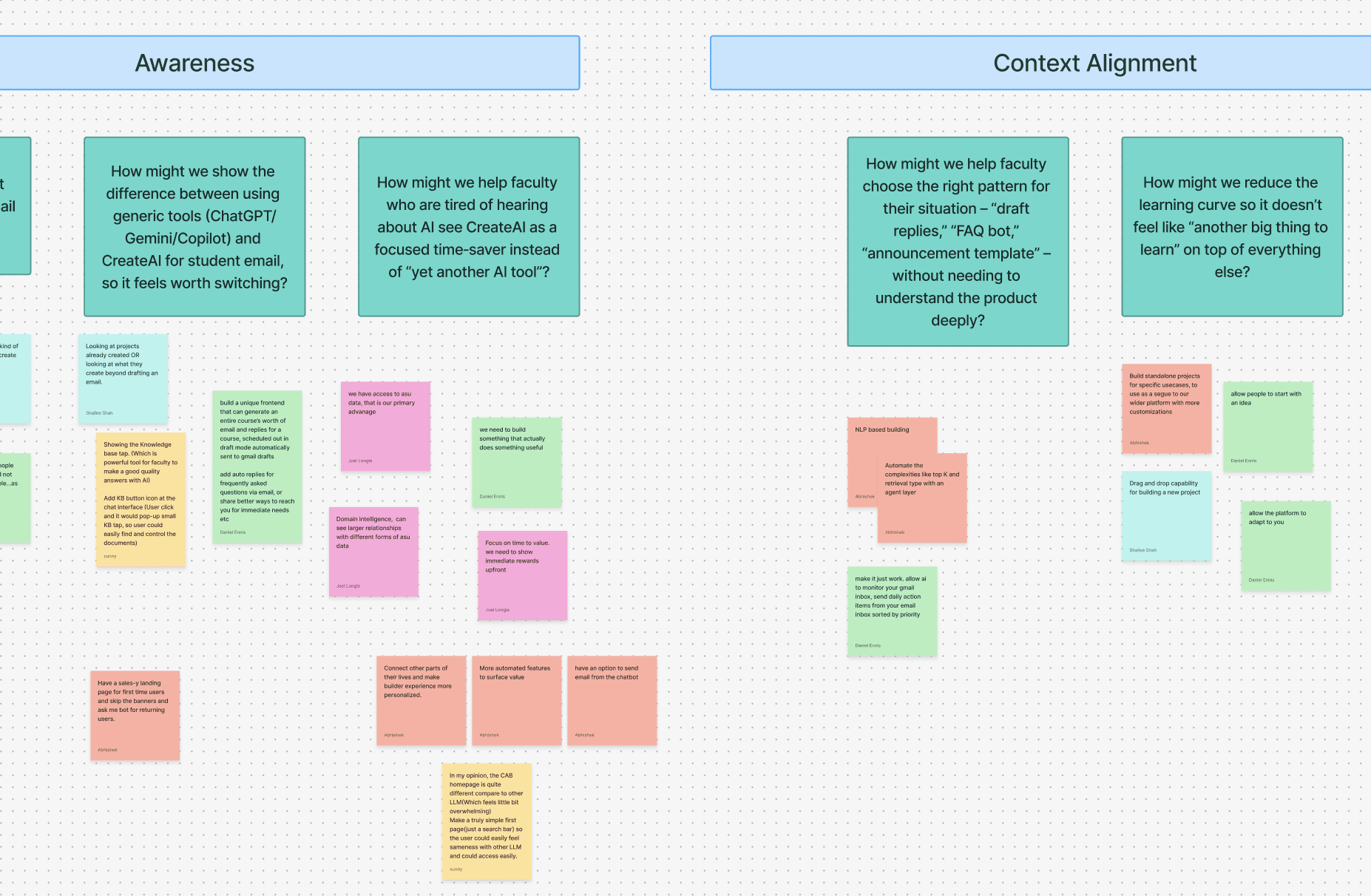

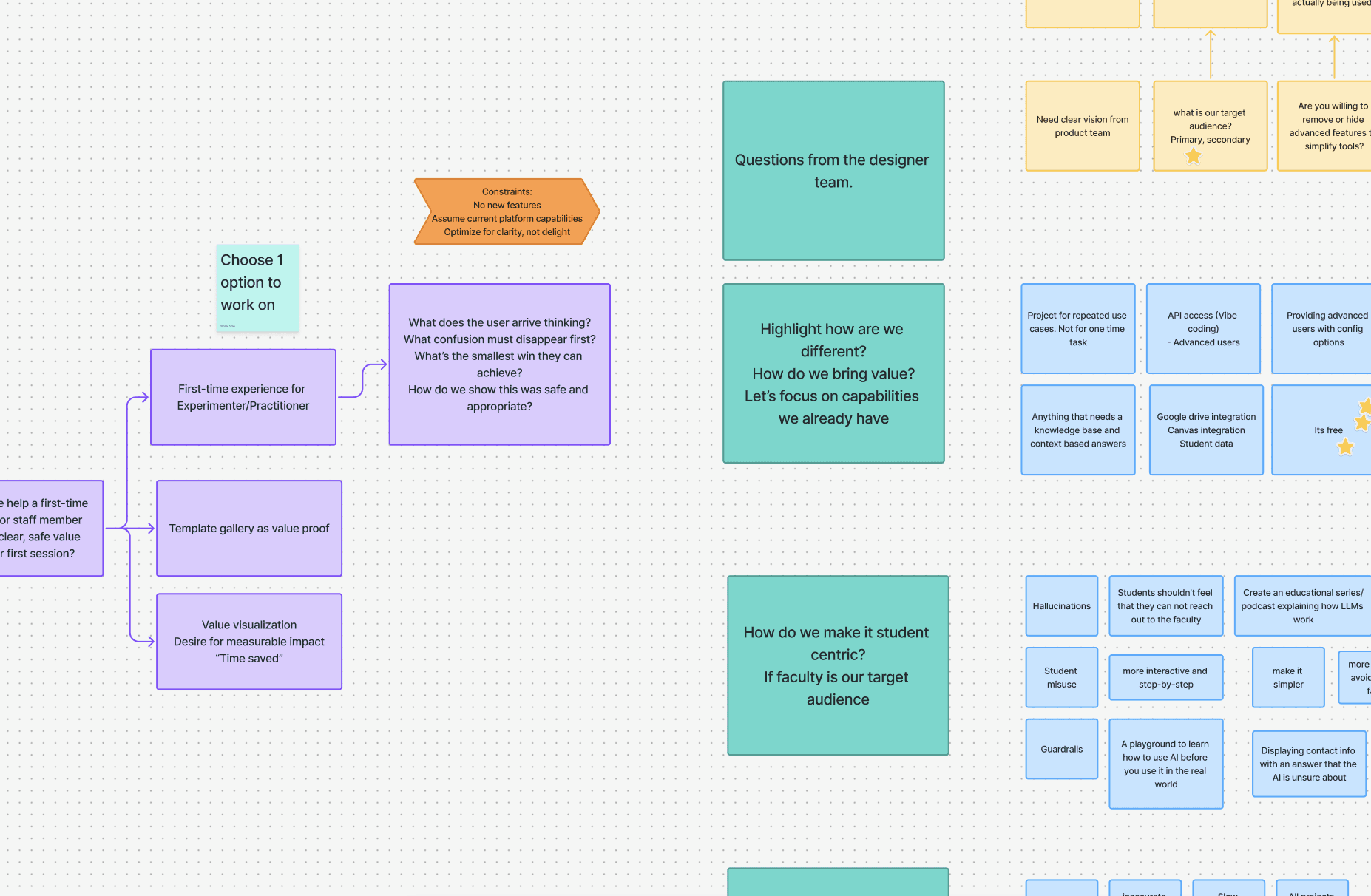

Post research

I am currently conducting prioritization workshops with stakeholders and design ideation sessions with designers.

Micro user journeys

Creating "How might we" statements

Focusing on one statement

LET'S CONNECT

I am everything design! I am resilient to the core.

Get in touch to find out more about how I create digital experiences to effectively reach and engage audiences.

Email: shailees0406@gmail.com

Phone: +1 (602) 576-4588
